Royal Mail criticised as first-class stamp price rises to £1.80 despite ‘failing service’

Royal Mail has been criticised for announcing another hike in the cost of first- and second-class stamps while providing what Citizens Advice described as a “failing service”. From 7 April, the price of a first-class stamp will increase by 10p, or 6%, to £1.80. The cost of the second-class service is going up by 4p, or 5%, to 91p. Royal Mail blamed the need for price increases on the “continued rise in the cost of delivery for every letter”.

US lost 92,000 jobs in February just before Trump joined Iran conflict

The US lost 92,000 jobs in February, an unexpected major slackening in the labor market that came just before Donald Trump threw the global economy into upheaval with his conflict in Iran. The unemployment rate edged up to 4.4% in February. In comparison, the US added a revised 126,000 jobs in January, far surpassing expectations of 70,000 jobs but still less than January 2025. Economists predicted an increase of 60,000 jobs added in February and a steady unemployment rate of 4

BP’s new boss will take home at least £11.7m this year, more than double her predecessor

The incoming chief executive of BP will take home at least £11.7m this year after joining the embattled oil company from a rival, more than double the pay packet earned by her predecessor. Meg O’Neill will join BP from the Australian oil company Woodside Energy in April as the company’s first external hire to its top job, and the first woman to serve as chief executive at the 117-year-old oil major. The former ExxonMobil executive will earn a base salary of £1.6m, narrowly above the salary paid to her predecessor, Murray Auchincloss, but the bulk of her pay packet will be payments made by BP to compensate O’Neill for the share awards she was in line to receive over the next five years in her previous role.

Rail passengers warned over six-day Easter shutdown on west coast mainline

Rail passengers planning to travel over the Easter break face disrupted journeys owing to a six-day shutdown on Britain’s biggest intercity line. Engineering work means there will be no west coast mainline services between London Euston and Milton Keynes from Good Friday (3 April) to Wednesday 8 April. There will also be no service between Preston and Lancaster on the line on 4-5 April. Network Rail said the work, which is part of a £400m project to increase the reliability of the line, was vital and that bank holidays were chosen for such works because they were among the least busy times to close. “The four-day period at Easter gives us a valuable opportunity to complete projects that simply can’t be delivered during a normal weekend,” said Jake Kelly, the body’s regional director for the north-west and central region.

‘We’re powerless … and hoping nothing hits us’: trapped on a tanker as Iran war escalates

Thousands of seafarers are trapped on tankers in the Gulf after the strait of Hormuz was effectively closed to shipping by the escalating war on Iran. The Guardian spoke to a crew member on one of the stranded tankers, which typically ferry vast quantities of oil from the Middle East to ports around the world. “When [Donald] Trump said Iran had 10 days to agree to his deal or bad things would happen, I did the math and thought we might get stuck here. And we did,” said the seafarer. From a cabin below deck, they explained how the crew watched explosions light up the sky as they loaded the vessel with crude oil at an industrial complex in the Gulf.

US grants waiver to allow India to buy Russian oil amid Iran war

The US has temporarily allowed India to buy Russian oil currently stuck at sea in an effort to keep global supplies flowing and temper further price increases. The US treasury has issued a 30-day waiver allowing India to buy Russian oil, having previously imposed heavy sanctions related to the war in Ukraine. “To enable oil to keep flowing into the global market, the treasury department is issuing a temporary 30-day waiver to allow Indian refiners to purchase Russian oil,” the treasury secretary, Scott Bessent, said in a statement posted to social media on Thursday. “This stopgap measure will alleviate pressure caused by Iran’s attempt to take global energy hostage.” In August the US president, Donald Trump, imposed an additional 25% import tariff on India over its purchase of cheap Russian oil, arguing that New Delhi’s purchases were undermining US sanctions and helping Vladimir Putin bankroll the invasion of Ukraine.

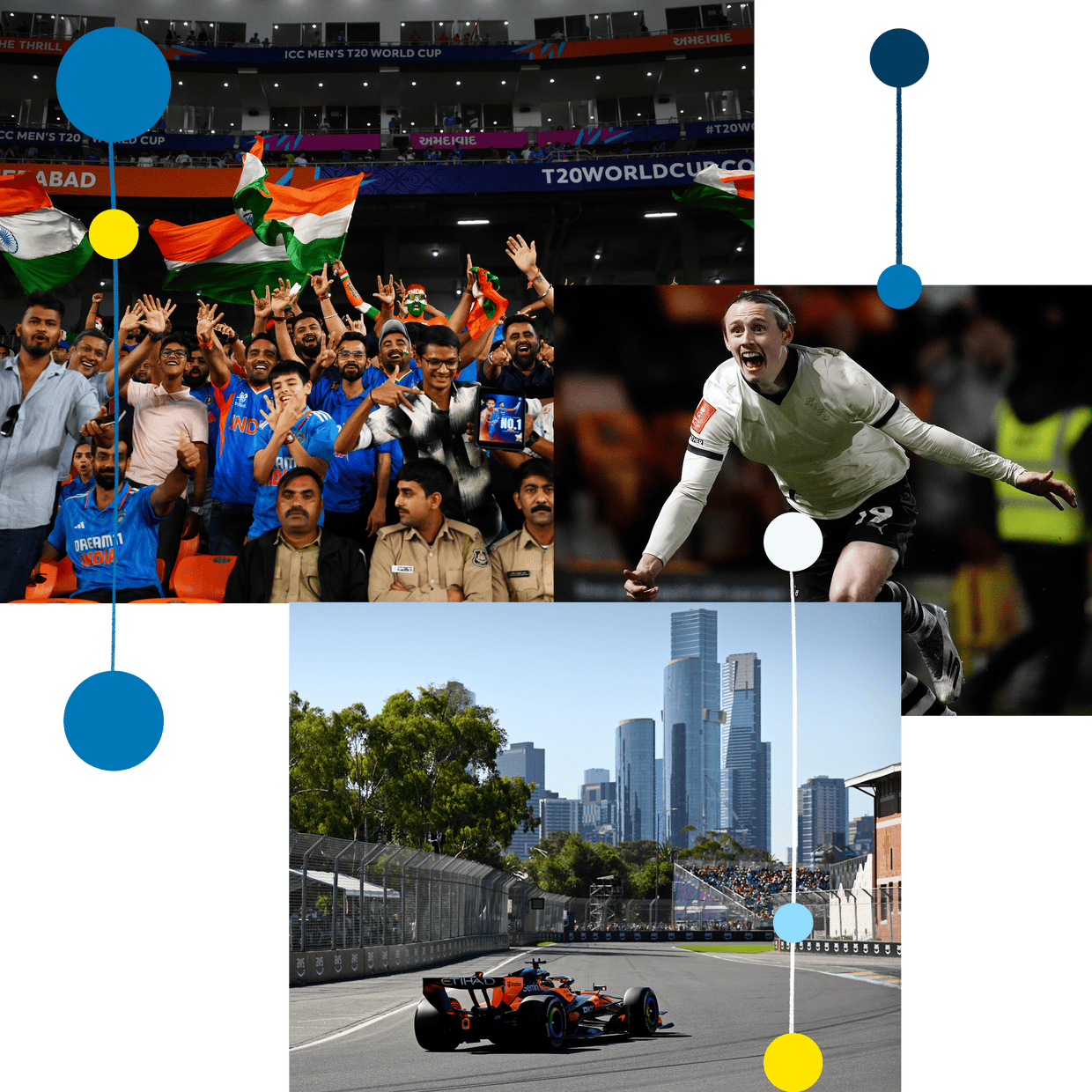

T20 World Cup final, Six Nations, FA Cup and F1 returns – follow with us

Nine speeding tickets and counting: Myles Garrett and the illusion of invincibility | Lee Escobedo

Stakes sky high for England as Italy eye Six Nations upset for the ages | Robert Kitson

Naoya Inoue to face Junto Nakatani in historic Tokyo Dome megafight

Scotland sense chance against France to end cycle of brilliance and despair | Michael Aylwin

World Cup exit ‘a tough pill to swallow’ for England’s Jacob Bethell after maiden T20 ton