Crypto billionaire Christopher Harborne no longer interested in Reform-Tory pact

Christopher Harborne, the ultra-wealthy political donor who has given £12m to Reform UK, has told the Guardian he is “no longer” interested in a Reform-Conservative pact before the next general election. A possible collaboration between Reform and the Conservative party had been an important aspect of discussions about donations between Harborne and senior figures including Nigel Farage, sources familiar with the conversations said. The Thailand-based cryptocurrency investor had previously wanted Farage to keep an open mind about a pact between the two parties, the same sources added. This position has changed, however. Harborne said in an emailed statement: “In the past this was possibly the case, but it is no longer the case

Nigel Farage to discuss Chagos Islands deal at Mar-a-Lago dinner with Donald Trump tonight – as it happened

Downing Street has denied there has been a U-turn on UK government policy on Iran after Britain’s deputy prime minister suggested this morning that the UK could take part in strikes on Iranian targets. Royal Air Force jets could legally strike Iranian missile sites being used to attack British interests in the Middle East, David Lammy said in a BBC interview earlier today. Lammy has also said it is an “absolute travesty” that details were leaked from a top secret national security meeting on the US-Israel attacks on Iran and has called for an investigation. There were reports last weekend of cabinet splits at a national security council meeting, which is protected by the Official Secrets Act, over allowing the US to use British bases for the strikes against Iran.

Starmer is facing a cocktail of dissent that is growing ever more potent

But for the Iran crisis, Labour’s first major policy announcement since the party’s calamitous defeat in the Gorton and Denton byelection would have been arguably the biggest political story of the week. Shabana Mahmood, the home secretary, pressed ahead with what is intended to be the party’s full-throated answer to the competition it faces from Reform UK as she declared an end to permanent refugee status and the removal of state support from some asylum seekers. It immediately put her on a collision course with many Labour backbenchers, but it also left the party’s soft-left majority, who had been pushing for a more progressive offering in recent weeks, asking: “Is that it?” The victory speech in Gorton and Denton by Hannah Spencer, the newly minted Green party MP, contained the sort of lines that many on Labour’s backbenches yearn to hear their leader utter, or even nod towards. Hard-working people had become “sick of making other people rich” and now wondered what their toil would yield, said the young plumber. Yet while Keir Starmer’s troops expected at least some red meat this week from their party’s leadership to counter the Green challenge for economically squeezed traditional Labour voters, his instinctive response was to send a letter to MPs in which he repeated an attack line that sought to paint Zack Polanski’s party as extremist

Defence secretary accuses Tory and Reform MPs of ‘unpatriotic’ behaviour

The defence secretary, John Healey, has accused opposition politicians of deliberately undermining the UK’s relationship with Donald Trump, saying it was “unpatriotic” for MPs to seek to turn the US against Keir Starmer. Healey, speaking to the Guardian at RAF Akrotiri in Cyprus, which was hit by a drone strike over the weekend, said he had been shocked at the way politicians like Nigel Farage had sought to “undermine” the UK’s relationship with the US. The Conservatives and Reform UK have criticised the British decision not to allow the US to use UK bases for offensive strikes against Iranian targets, though they will be used to help defend UK interests and allies in the region from Iranian retaliatory attacks. But Healey said he had been shocked by the extent to which senior MPs had sought to curry favour with the US president by undermining the position of the UK government – not just on the Iran attacks but also over the Chagos Islands deal. Kemi Badenoch, the Tory leader, and Farage have both praised Trump for his opposition – albeit fluctuating – to the government’s Chagos plan, which the US president criticised when apparently frustrated with the UK over other issues

Kemi is wrong about everything. Which is almost an achievement in itself | John Crace

Cast your mind forward 10 years or so. Long after Kemi Badenoch has been sacked as Tory party leader without even getting to contest an election. Long after she has been fired from a sinecure in an HR firm for falling out with all her colleagues. Long after she was dismissed from a Tory thinktank for being unable to think. Long after she was forced to take early retirement

Best way forward for Iran would be negotiated settlement, says Starmer

Keir Starmer has said the conflict engulfing the Middle East could continue “for some time” as he insisted the best way forward in the longer term was a negotiated settlement with Iran. The prime minister said the UK was doing “everything we can” to de-escalate the situation, a clear contrast to the US president, who is focused on regime change and has said it was “too late” for Tehran to negotiate. He defended his decision to block initial offensive strikes by the US and Israel at the weekend, saying he stood by his judgment and denying it had damaged the special relationship. Starmer has faced some criticism from Gulf states and Cyprus for not doing enough to protect regional allies and British citizens there from Iranian strikes. He has also been subject to personal attacks from Trump, including that he was “not Winston Churchill”

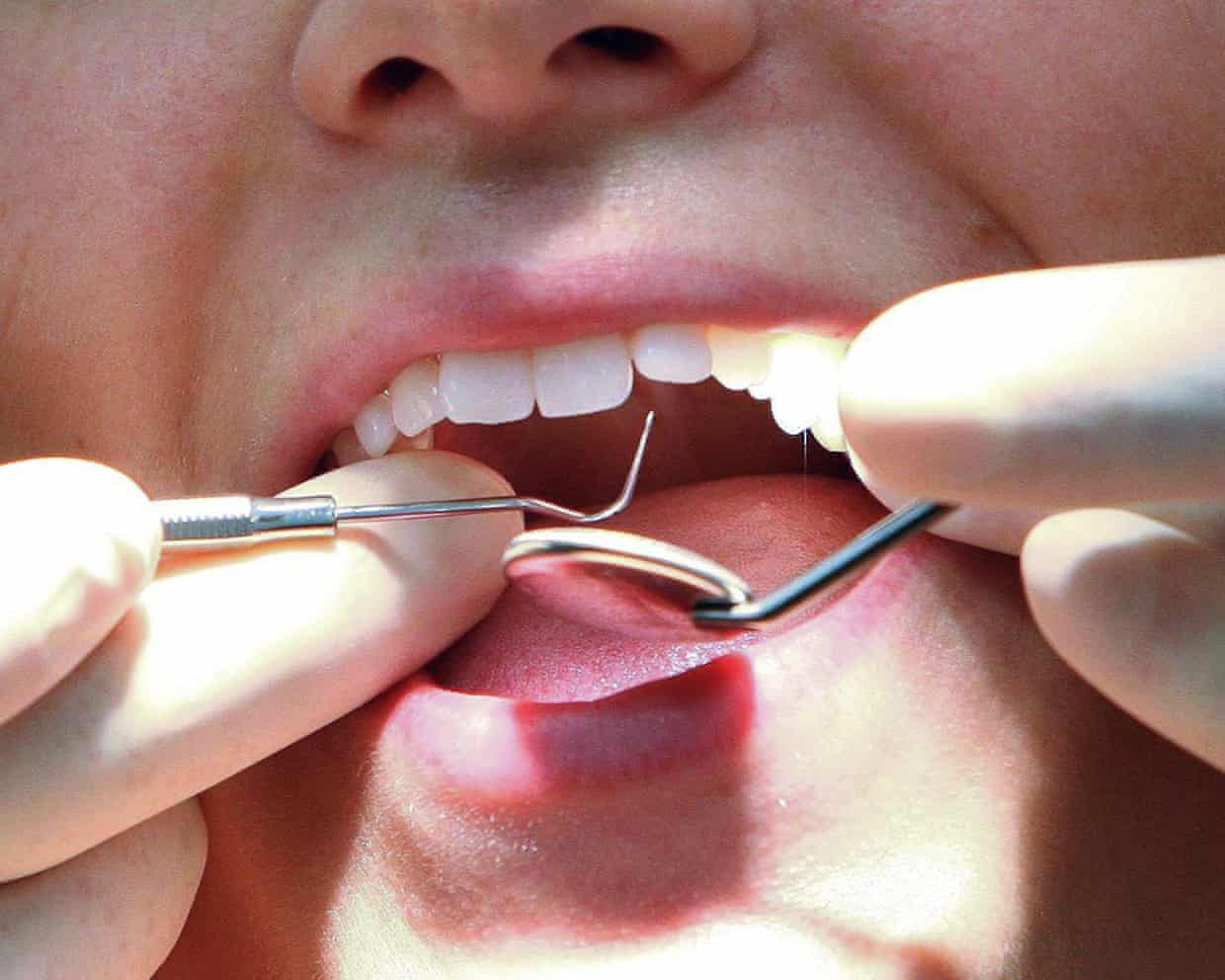

UK’s private dentistry market faces review after price jumps of more than 23%

‘A space of their own’: how cancer centres designed by top architects can offer hope

UK government ‘effectively allowed’ child sexual abuse, campaigners say

Circumcision classed as potentially harmful practice in new CPS guidance

Scientists laud potentially life-changing drug for children with resistant form of epilepsy

More than 220m children will be obese by 2040 without drastic action, report warns