Actor reaches settlement with Old Vic theatre over Kevin Spacey assault claims

An actor who alleged that he was sexually assaulted by Kevin Spacey has reached a settlement with the Old Vic theatre. Ruari Cannon, who waived his right to anonymity, was an actor at the Old Vic during Spacey’s tenure as artistic director. He claimed that Spacey assaulted him at a theatre after-party at the Savoy hotel and at the Old Vic’s theatre bar on a separate occasion. Spacey has denied the allegations. In a statement, the Old Vic said: “Ruari Cannon and the Old Vic have reached a mutually agreed out-of-court settlement, the precise terms of which are confidential

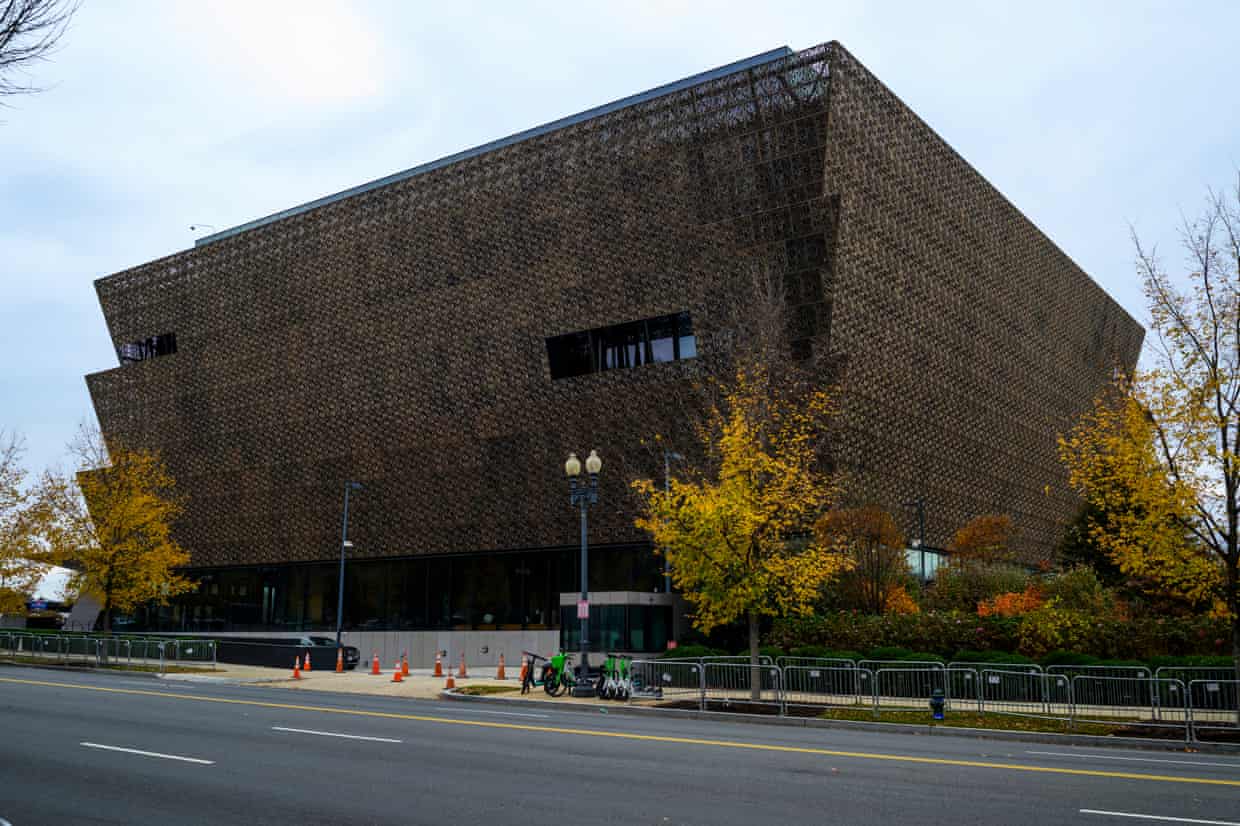

‘Excellence’: Smithsonian exhibit celebrates HBCUs amid attacks on Black history

At a time when museums and colleges are facing uncertainty and there is a push to limit the acknowledgment of Black history, the Smithsonian’s National Museum of African American History and Culture (NMAAHC) and its five partner historically Black colleges and universities (HBCUs) have launched a new exhibit to put Black history and Black archives at the forefront. At the Vanguard: Making and Saving History at HBCUs, on view at the NMAAHC now through 19 July, was developed as part of the History and Culture Access Consortium (HCAC). After At the Vanguard leaves the NMAAHC, it will go on tour to each of the universities, along with other locations that request it. The exhibit, which is composed of archival materials and collections from each of the five HBCUs of the partnership – Jackson State University, Florida A&M University, Tuskegee University, Clark Atlanta University and Texas Southern University – is the culmination of years of work by the consortium. With more than 100 objects on display at the NMAAHC, the collection includes rare items, such as one of the only existing color videos of George Washington Carver, the agricultural scientist and inventor, from Tuskegee University

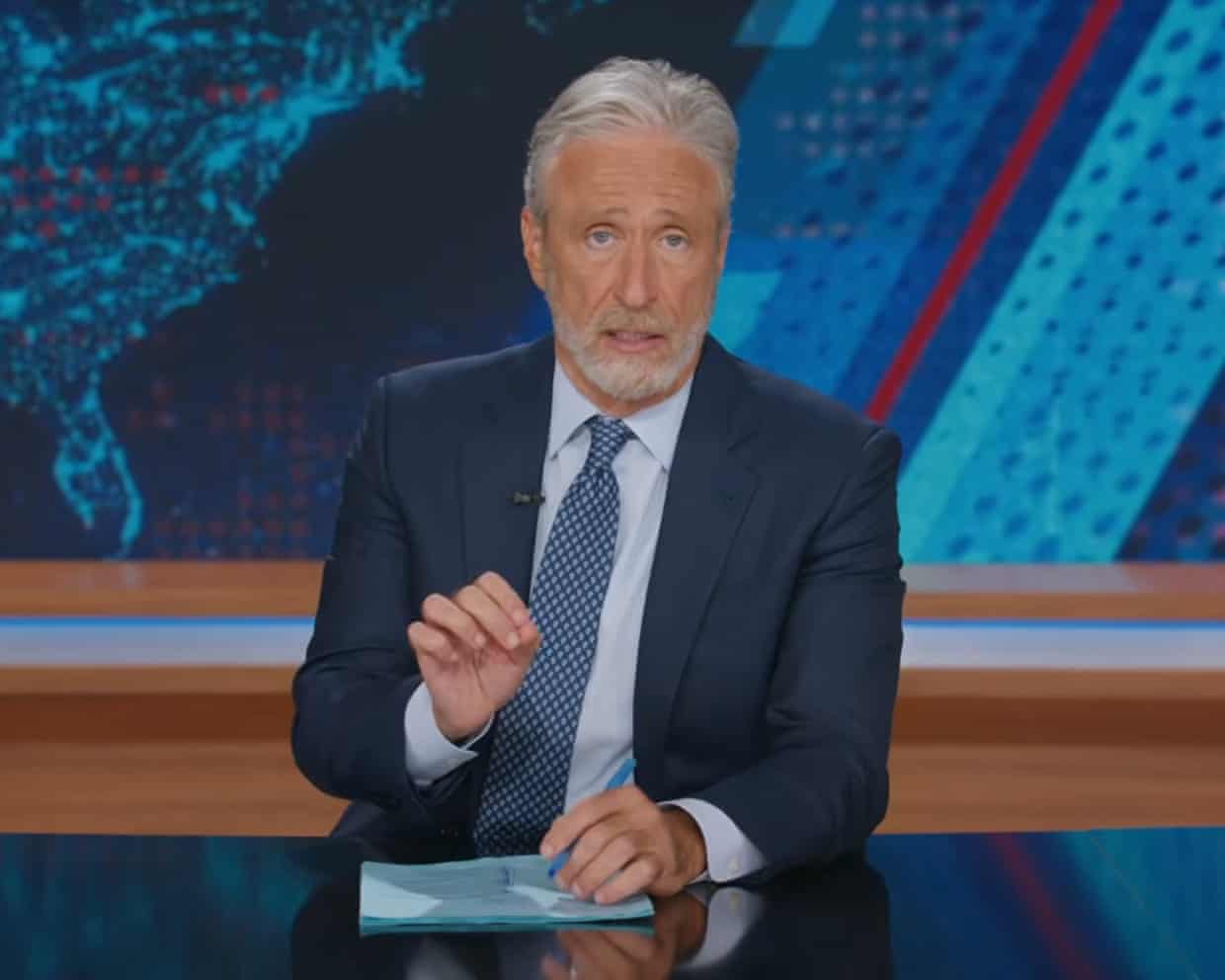

Jon Stewart on US attacks in Iran: ‘A war with no clear purpose, no end in sight’

Late-night hosts delved into the new US regime-change war in the Middle East, after Donald Trump directed the US military to bomb Iran in conjunction with Israel. Jon Stewart opened The Daily Show on Monday in a daze, after Iranian state media confirmed that US and Israeli forces killed its supreme leader, Ayatollah Ali Khamenei, over the weekend. The host joked that, for the surprise occasion and the chaos that followed, he needed to bring back “a 20-year recurring segment” titled “Mess O’Potamia”. “America, apparently, had to start an entire war to kill an 86-year-old man in ill health and not wait – I don’t know – three weeks to let saturated fat do its thing,” he joked. He then played a clip of Trump, wearing his USA hat, announcing the so-called “Operation Epic Fury” against Iran from his luxury golf course in Florida

‘My guitar was mangled – like my life!’ Goo Goo Dolls on how they made epic ballad Iris

‘I’m grateful to Taylor Swift, and others who have covered it, for introducing the song to a new generation. Three billion streams on Spotify is astonishing!’

I was going through a divorce and living in a hotel in West Hollywood when my manager said Warner Brothers were seeking songs for the movie City of Angels. They already had U2, Peter Gabriel and Alanis Morissette, so I thought getting a track on there would draw attention to us. Warners showed me the film and it was like Wim Wenders’ Wings of Desire. They wanted a song for the scene where the angel – played by Nicolas Cage – decides to become human to be with the woman he loves

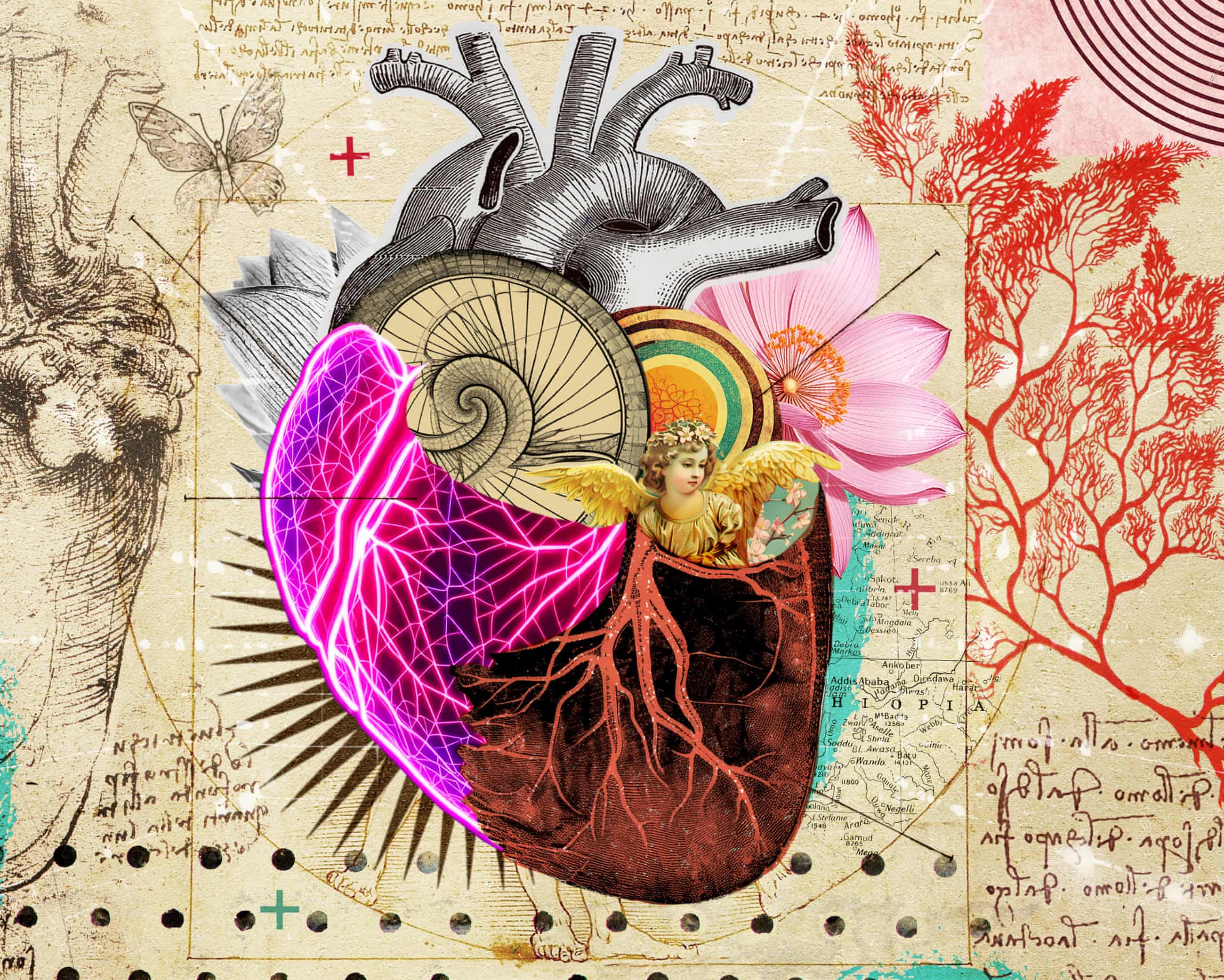

My cultural awakening: Leonardo da Vinci made me rethink surgery – I’ve since mended more than 3,000 hearts

For one heart surgeon, seeing the Renaissance artist’s anatomical drawings gave him a natural understanding of the body that was often overlooked in modern medical science.

If you’d asked my teenage self, growing up in a small village in Shropshire, what I wanted to do with my life, I would have talked about art and music long before I spoke of scalpel blades and operating theatres. As an 18-year-old, I intended to go to art school, until my mother sat me down and told me rather bluntly that being an artist wouldn’t earn me much money. As she spoke, a surgical documentary flickered across the screen of the black-and-white television in our living room. I told her, half joking, that that was what I’d do instead. Which is how I ended up repeating my A-levels and fighting my way into medical school, where I qualified in 1975

The Guide #232: From documentary shock to Bafta acclaim – how the screen shaped our understanding of Tourette’s

The wildfire surrounding last week’s Bafta ceremony – where Tourette syndrome campaigner John Davidson involuntarily shouted a racial slur at actors Michael B Jordan and Delroy Lindo, and the BBC aired the moment – continues to rage. Criticisms have been levelled at, and investigations opened by, the Beeb and Bafta; hundreds of news stories and comment pieces have been devoted to the incident (if you read anything, make sure it’s this clear-eyed piece from Jason Okundaye, who was at the ceremony); and the climate on social media has been toxic, with much of the ire directed at Davidson himself. It’s an ire that is based on a complete misunderstanding of coprolalia, the form of Tourette syndrome (TS) that Davidson has, which results in the unintended and completely involuntary utterance of offensive or inappropriate remarks.

There’s an unhappy irony at play here because Davidson, arguably more than any other person in Britain, has been responsible for raising awareness of TS. There’s an unfortunate symmetry, too, to the fact that the incident was shown on primetime BBC, because that was where Davidson was first brought to national attention as the subject of the landmark 1989 documentary John’s Not Mad

T20 World Cup final, Six Nations, FA Cup and F1 returns – follow with us

Nine speeding tickets and counting: Myles Garrett and the illusion of invincibility | Lee Escobedo

Stakes sky high for England as Italy eye Six Nations upset for the ages | Robert Kitson

Naoya Inoue to face Junto Nakatani in historic Tokyo Dome megafight

Scotland sense chance against France to end cycle of brilliance and despair | Michael Aylwin

World Cup exit ‘a tough pill to swallow’ for England’s Jacob Bethell after maiden T20 ton